# The Evolution of Computers: A Historical Perspective

## Introduction

The evolution of computers has been a fascinating journey, marked by technological advancements that have reshaped the way we live and work. From early calculating devices to today's smartphones, AI systems, and experimental quantum computers, the history of computers reflects human ingenuity and innovation.

---

## 1. Early Mechanical Devices

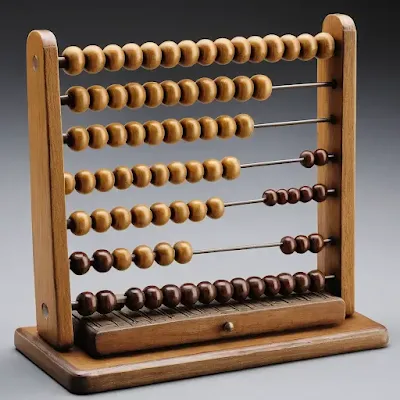

The concept of computing can be traced back to ancient times. The abacus, used in various forms by many ancient cultures, is one of the earliest known computing devices. It allowed users to perform basic arithmetic operations by moving beads or counters along rods or grooves.

### Image Suggestion: Abacus Display

*An image showing different types of abacuses used in various cultures.*

---

## 2. The Analytical Engine

In the 1830s, Charles Babbage conceptualized the Analytical Engine, a mechanical general-purpose computer designed to be programmed with punched cards. Though never completed, it introduced fundamental concepts of modern computers: an arithmetic unit (which Babbage called the "mill"), memory (the "store"), and control flow through conditional branching and loops.

### Image Suggestion: Diagram of the Analytical Engine

*A diagram illustrating the components of Babbage's Analytical Engine.*

---

## 3. The First Programmers

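Ada Lovelace, often regarded as the first computer programmer, studied the Analytical Engine closely. In 1843 she published a translation of Luigi Menabrea's article on the machine, adding extensive notes of her own that included what is widely considered the first algorithm intended to be carried out by a machine. She also recognized that the Engine could manipulate symbols as well as numbers, speculating that it might one day compose music.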
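Lovelace's best-known note, Note G, laid out a step-by-step procedure for computing Bernoulli numbers on the Engine. To give a feel for that kind of calculation, here is a minimal modern sketch in Python. It uses a standard textbook recurrence and exact fractions; it is an illustration, not a transcription of her table, whose method, notation, and indexing differ. The function name `bernoulli_numbers` is hypothetical.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return the Bernoulli numbers B_0 through B_n as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    which yields the convention B_1 = -1/2.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # Sum the already-known terms B_0..B_{m-1}, then solve for B_m
        # (its coefficient in the recurrence is C(m+1, m) = m + 1).
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b[m] = -total / (m + 1)
    return b


if __name__ == "__main__":
    print(bernoulli_numbers(8)[8])  # -1/30
```

Each new value depends only on the ones already computed, which is the same kind of repeated, stored-result calculation the Analytical Engine was designed to automate.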

### Image Suggestion: Portrait of Ada Lovelace

*A historical portrait of Ada Lovelace, with a caption summarizing her contributions to computing.*

---

## 4. The Electronic Era Begins

The mid-20th century marked the transition from mechanical to electronic computing. The ENIAC (Electronic Numerical Integrator and Computer), completed in 1945 and unveiled to the public in 1946, was one of the first electronic general-purpose computers. It used roughly 18,000 vacuum tubes and could perform about 5,000 additions per second.

### Graph Suggestion: ENIAC Performance Comparison

*A graph comparing the performance of ENIAC with modern computers, showcasing its speed limitations.*

---

## 5. The Birth of Microprocessors

In the early 1970s, the invention of the microprocessor revolutionized computing. Intel's 4004, released in 1971, was the first commercially available microprocessor. This development led to the miniaturization of computers and the rise of personal computing.

### Image Suggestion: Intel 4004 Microprocessor

*An image of the Intel 4004 microprocessor, highlighting its compact design.*

---

## 6. The Personal Computer Revolution

The late 1970s and early 1980s saw the emergence of personal computers (PCs). Companies like Apple, IBM, and Commodore introduced user-friendly systems, making computers accessible to the masses. The Apple II, launched in 1977, is often credited with popularizing the personal computer.

### Graph Suggestion: PC Sales Growth Over the Years

*A line graph showing the growth in personal computer sales from the late 1970s to the early 2000s.*

---

## 7. The Internet Age

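Although the internet's roots go back to the ARPANET of the late 1960s, it was in the 1990s that the internet reached the mainstream, fundamentally changing how computers were used. The World Wide Web, proposed by Tim Berners-Lee at CERN in 1989, made information easy to publish and navigate through hyperlinked pages viewed in a web browser. This interconnectedness has since become a cornerstone of modern computing.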

### Graph Suggestion: Internet Usage Growth

*A line graph showing the rapid growth in the number of internet users from the early 1990s to the present day.*

---

## 8. Mobile Computing and Smartphones

The spread of smartphones in the late 2000s further transformed the computing landscape. Devices like Apple's iPhone, introduced in 2007, packed powerful computing capabilities into a pocket-sized touchscreen format, allowing users to access information and communicate on the go.

### Image Suggestion: Evolution of Smartphones

*An infographic showcasing the evolution of smartphones over the years.*

---

## 9. The Rise of Artificial Intelligence

Although AI research dates back to the 1950s, artificial intelligence has become a central focus of computing in recent years. Machine learning algorithms, especially deep neural networks trained on large datasets, enable computers to perform complex tasks such as image recognition and natural language processing. Continued progress in these techniques is expected to reshape many industries and extend human capabilities.

### Graph Suggestion: AI Investment Growth

*A bar graph illustrating the increase in investments in AI technologies over the past decade.*

---

## 10. The Future of Computing

As we look to the future, quantum computing represents one of the next frontiers in computing technology. Quantum computers use quantum-mechanical effects such as superposition and entanglement to process information in ways classical computers cannot. Today's machines are still small and error-prone, but the field could eventually transform certain kinds of problem-solving, such as simulating molecules or factoring very large numbers.
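The idea of superposition can be made concrete with the standard textbook description of a single qubit (general notation, not tied to any particular machine). Its state is a weighted combination of the two basis states, written |0⟩ and |1⟩:

$$
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad \alpha, \beta \in \mathbb{C}, \qquad |\alpha|^2 + |\beta|^2 = 1
$$

Measuring the qubit gives 0 with probability |α|² and 1 with probability |β|². For example, with α = β = 1/√2, each outcome has probability (1/√2)² = 1/2. A general state of n qubits needs 2ⁿ complex amplitudes to describe, which is one reason quantum systems are so hard to simulate on classical computers.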

### Image Suggestion: Quantum Computer Diagram

*A diagram illustrating the basic principles of quantum computing, such as qubits and superposition.*

---

## Conclusion

The history of computers is a testament to human creativity and the relentless pursuit of knowledge. From the early days of the abacus to the cutting-edge developments in quantum computing, each advancement has paved the way for the next. As we continue to innovate, the possibilities for the future of computing are boundless.
